The U.S. Supreme Court has declined to hear Robert F. Kennedy Jr.’s legal challenge against tech giants like Meta and Google, a notable development in the debate over free speech and social media. The case, which centered on allegations that these platforms censored Kennedy’s vaccine-related content under government pressure, was turned away by the Court in late June, leaving the lower court ruling in place. But what does this mean for the average user and the broader debate on content moderation? Here’s a breakdown of the case, the outcome, and its implications.

Supreme Court Rejects RFK Jr.’s Free Speech Challenge

While RFK Jr.’s legal challenge will not be heard by the Supreme Court, the issue of online speech is far from resolved. The lower court rulings indicate that private companies are well within their rights to moderate content, and that courts require clear evidence of government coercion before treating a platform’s moderation decisions as government censorship.

As platforms like Meta and YouTube continue to navigate the fine line between content moderation and censorship, it remains to be seen how the legal landscape will evolve. For now, this ruling means users will continue to interact in a space where corporate policy often shapes the content they see—and what they are allowed to say.

The Case at Hand: RFK Jr.’s Fight Against Big Tech

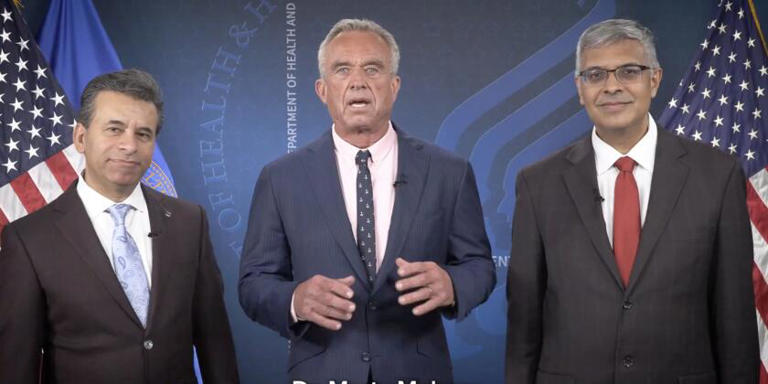

Kennedy, the founder of Children’s Health Defense, a group that has been vocal in its opposition to certain public health policies, filed a lawsuit in 2023. He alleged that platforms like Facebook (Meta) and Google’s YouTube had silenced his posts on vaccine-related issues, not based on their own guidelines, but because of pressure from government officials. His team argued that these actions violated the First Amendment by infringing on free speech.

However, courts at the district and appellate levels ruled against him, finding no conclusive proof of “state action.” Essentially, the courts found no direct evidence that the government had unlawfully coerced these platforms into censoring specific content. Kennedy’s legal team then asked the Supreme Court to review the case, hoping for a reversal.

In the end, the Supreme Court declined to take the case, which left the lower court’s ruling in place and Kennedy and his legal team without a victory. The denial, which was expected, leaves open significant questions about government influence over private platforms and the limits of First Amendment protections in the digital age.

Why the Court Rejected the Case

A refusal to hear a case is not a ruling on the merits, and such denials typically come without explanation. What it does is leave the lower courts’ findings standing: while government officials had made requests to platforms like Meta, there was no clear indication that these requests amounted to unconstitutional government action.

In legal terms, the case revolved around whether the actions of private companies, such as Facebook and Google, could be treated as government censorship. The First Amendment protects individuals from government-imposed censorship, but private companies, as the lower courts held, can still decide what content to allow or remove from their platforms based on their own policies. The outcome leaves intact the legal principle that tech companies retain the right to control content on their platforms under their own terms and conditions.

A Broader Debate on Section 230 and Online Moderation

The ruling also touches on the ongoing debates over Section 230 of the Communications Decency Act, which shields platforms from liability for content published by users. Critics argue that Section 230 allows platforms to avoid responsibility for harmful content, including misinformation. As lawmakers debate the future of Section 230, decisions like this one fuel calls for stricter regulations or a complete overhaul of online content laws.

Some experts believe the Supreme Court’s refusal to hear the case signals a broader reluctance to interfere in the regulation of online spaces unless there is clear evidence of illegal actions, like direct governmental control over content moderation. Others, however, warn that the outcome means private companies will continue to hold substantial power over speech in online spaces, without the legal oversight some believe is needed.

What Comes Next?

Kennedy’s team has not ruled out future legal action. If more concrete evidence emerges that the government directly influenced platforms to censor speech, it could prompt a fresh legal battle. Similarly, public pressure and potential changes to the laws governing tech companies—such as revisiting Section 230—may continue to shape the future of online content moderation.

For now, the Court’s decision serves as a reminder that the balance between freedom of speech, government influence, and corporate power remains a complicated and evolving issue. As social media platforms continue to play a critical role in public discourse, the question of who controls online speech—and to what extent—is far from settled.